BatMobility: Towards Flying Without Seeing for Autonomous Drones

TL;DR: Doppler shift enables autonomous navigation in challenging environments lacking visual or geometric features.

Unmanned aerial vehicles (UAVs) rely on optical sensors such as cameras and lidar for autonomous operation. However, optical sensors fail in poor lighting, are occluded by debris and adverse weather, struggle in featureless environments, and easily miss transparent surfaces and thin obstacles. In this paper, we question the extent to which optical sensors are sufficient or even necessary for full UAV autonomy. Specifically, we ask: can UAVs fly autonomously without seeing? We present BatMobility, a lightweight mmWave radar-only perception system for autonomous UAVs that eliminates the need for optical sensors. BatMobility enables vision-free autonomy through two key functionalities: radio flow estimation (a novel FMCW radar-based alternative to optical flow, based on surface-parallel Doppler shift) and radar-based collision avoidance. We build BatMobility using inexpensive commodity sensors and deploy it as a real-time system on a small off-the-shelf quadcopter, showing its compatibility with existing flight controllers. Surprisingly, our evaluation shows that BatMobility achieves comparable or better performance than commercial-grade optical sensors across a wide range of scenarios.

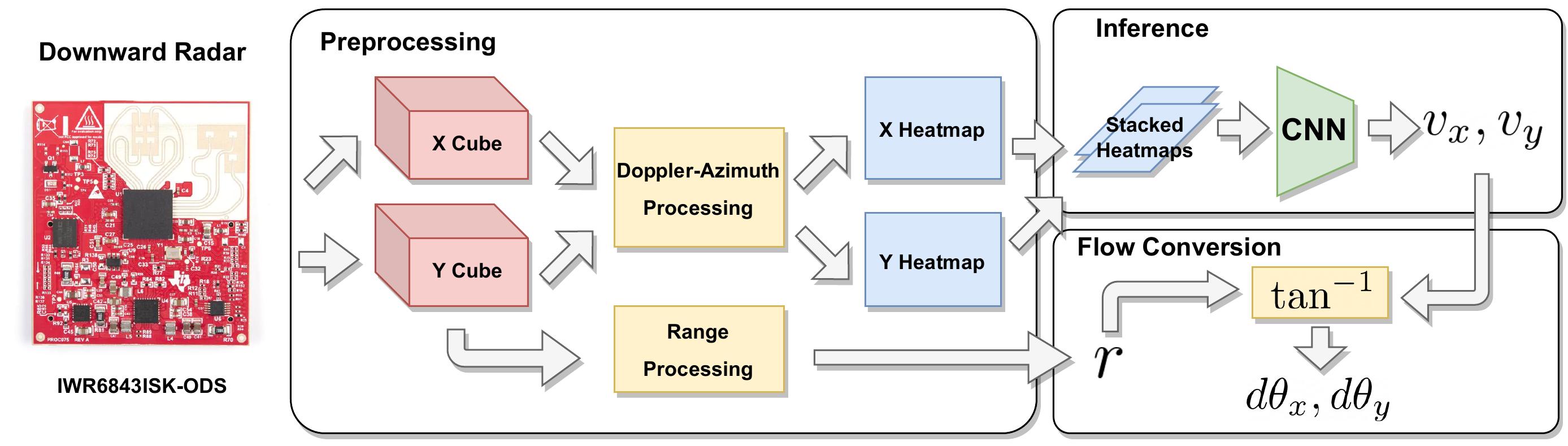

How can we stabilize a drone using only a downward-facing radar? Obtaining altitude is straightforward, but estimating ground-parallel motion is challenging. Our key insight is that at mmWave frequencies, most ground surfaces appear diffuse to radar, so ground-parallel motion induces unique patterns in the Doppler-angle plane. We can make these patterns apparent even on very low-resolution antenna arrays by extending the Doppler axis.
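To make the Doppler-angle relationship concrete, here is a minimal sketch, not the authors' implementation. It assumes an ideal diffuse ground made of static point scatterers, a 77 GHz carrier (the source only says mmWave), and noise-free Doppler measurements with known scatterer directions. The function names `doppler_shifts` and `fit_velocity` are hypothetical. A scatterer in unit direction u seen from a radar moving with velocity v produces a Doppler shift of 2 (u · v) / λ, so a point directly below the radar contributes nothing under horizontal motion while off-nadir points do, and the velocity can be recovered by least squares.

```python
import numpy as np

C = 3.0e8                   # speed of light, m/s
F_CARRIER = 77e9            # ASSUMED carrier frequency, Hz (source only says "mmWave")
WAVELENGTH = C / F_CARRIER  # roughly 3.9 mm


def unit_directions(points):
    """Unit vectors from the radar (at the origin) to each scatterer, shape (N, 3)."""
    points = np.asarray(points, dtype=float)
    return points / np.linalg.norm(points, axis=1, keepdims=True)


def doppler_shifts(points, velocity):
    """Doppler shift in Hz for static scatterers seen by a moving radar.

    points:   (N, 3) scatterer positions relative to the radar, metres.
    velocity: (3,) radar velocity, m/s. Approaching scatterers give positive shifts.
    """
    closing_speed = unit_directions(points) @ np.asarray(velocity, dtype=float)
    return 2.0 * closing_speed / WAVELENGTH


def fit_velocity(points, doppler):
    """Least-squares radar velocity from per-scatterer Doppler shifts."""
    design = 2.0 * unit_directions(points) / WAVELENGTH
    velocity, *_ = np.linalg.lstsq(design, np.asarray(doppler, dtype=float), rcond=None)
    return velocity


# Illustrative diffuse ground patch 1.5 m below the radar (values are made up).
rng = np.random.default_rng(0)
ground = np.column_stack([
    rng.uniform(-1.0, 1.0, 200),
    rng.uniform(-1.0, 1.0, 200),
    np.full(200, -1.5),
])
true_velocity = np.array([0.4, -0.2, 0.0])
estimated_velocity = fit_velocity(ground, doppler_shifts(ground, true_velocity))
print(estimated_velocity)
```

A real radar does not observe individual scatterers with known directions. It observes a coarse Doppler-angle heatmap, which is why this idealized fit is only an intuition aid.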

The previous simulated toy examples assume a perfectly diffuse surface. In practice, Doppler-angle heatmaps look different under real-world conditions because of specularity, multipath, noise, and other effects. We collect data on a variety of real-world surfaces and train a lightweight CNN to regress velocity from these imperfect Doppler-angle heatmaps. We also convert the predicted velocities to angular flow values for plug-and-play compatibility with existing flight controllers.
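The velocity-to-angular-flow conversion can be illustrated with a short sketch. It is an assumption about how such a conversion might look, not the paper's code. For a downward-facing sensor at altitude h, a ground point directly below appears to move at an angular rate of v / h, so the flow accumulated over a short interval dt is v · dt / h radians. The function name, argument order, and sign convention are hypothetical, and real flight controllers define their own axis conventions.

```python
def velocity_to_angular_flow(vx, vy, altitude, dt):
    """Convert ground-parallel velocity to integrated angular flow.

    vx, vy:   ground-parallel velocity components, m/s.
    altitude: height above ground, m (must be positive).
    dt:       integration interval, s.
    Returns (flow_x, flow_y) in radians accumulated over dt.
    Sign and axis conventions here are ASSUMED, not taken from any flight stack.
    """
    if altitude <= 0.0:
        raise ValueError("altitude must be positive")
    return (vx * dt / altitude, vy * dt / altitude)


# Illustrative values: 0.5 m/s forward, 0.2 m/s sideways, 2 m altitude, 100 ms interval.
flow_x, flow_y = velocity_to_angular_flow(0.5, -0.2, 2.0, 0.1)
print(flow_x, flow_y)
```

The same velocity yields a smaller angular flow at higher altitude, so an altitude estimate has to accompany the velocity estimate.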

We compare the loitering behavior of a UAV using (a) a commercial optical flow sensor and (b) BatMobility. On the left, the UAV is unable to hold its position in featureless or dark environments. On the right, BatMobility uses surface-parallel Doppler shift to maintain stability despite the lack of visual or geometric ground features.

BatMobility enables feedback-based velocity control in harsh environments (such as in the dark). This provides key action primitives (i.e., move forward, move left, turn right, stop) for vision-free navigation in unstructured environments.
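As an illustration of what feedback-based velocity control means here, the sketch below closes a loop around a velocity estimate with a simple PI controller. Everything in it is an assumption made for illustration: the controller type, the gains, the setpoint values attached to each primitive, and the toy one-axis drag model. None of it comes from the paper. The turn primitive is omitted because it would need a yaw measurement that this sketch does not model.

```python
from dataclasses import dataclass

# HYPOTHETICAL forward/left velocity setpoints (m/s) for three of the primitives.
PRIMITIVES = {
    "move forward": (0.5, 0.0),
    "move left": (0.0, 0.5),
    "stop": (0.0, 0.0),
}


@dataclass
class VelocityPI:
    """One-axis PI controller: velocity error in, acceleration command out."""
    kp: float = 1.0
    ki: float = 0.5
    integral: float = 0.0

    def update(self, setpoint, measured, dt):
        error = setpoint - measured
        self.integral += error * dt
        return self.kp * error + self.ki * self.integral


def simulate(setpoint, duration=20.0, dt=0.02, drag=0.3):
    """Toy model: dv/dt = command - drag * v, with a perfect velocity estimate."""
    controller = VelocityPI()
    velocity = 0.0
    for _ in range(int(duration / dt)):
        command = controller.update(setpoint, velocity, dt)
        velocity += (command - drag * velocity) * dt
    return velocity


forward_setpoint, _ = PRIMITIVES["move forward"]
print(simulate(forward_setpoint))
```

In a real system the measured velocity would come from the radar pipeline rather than the simulated state, and the command would go to the flight controller's own control loops.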

Emerson Sie, Xinyu Wu, Heyu Guo, Deepak Vasisht. MobiSys 2024. PDF | Project Page

@inproceedings{sie2023batmobility,
  author = {Sie, Emerson and Liu, Zikun and Vasisht, Deepak},
  title = {BatMobility: Towards Flying Without Seeing for Autonomous Drones},
  booktitle = {ACM International Conference on Mobile Computing and Networking (MobiCom)},
  year = {2023},
  doi = {10.1145/3570361.3592532},
}